It's finally time to deploy EKS with Terraform.

Reference links

- Introduction to Terraform on AWS - Link

- Managing Cloud Resources with Terraform - Link

- AWS TERRAFORM WORKSHOP: v0.15.1, '20 - Link

EKS

- Amazon EKS Blueprints for Terraform - Link / Github - Link / addon1 - Link / addon2 - Link / karpenter - Link

- EKS Blueprints for Terraform and Argo CD - Link

- EKS Workshop - Link

- EKS Terraform Workshop - Link / Youtube - Link / Github - Link

Let's get started!

1. Amazon EKS Blueprints for Terraform

[Lab] Karpenter on EKS Fargate - Link


This pattern demonstrates how to provision Karpenter on a serverless cluster (serverless data plane) using Fargate Profiles.

Prerequisites: awscli (with IAM administrator-level credentials), terraform, kubectl - Link

aws --version

terraform --version

kubectl version --client=true

Get the code - Github

git clone https://github.com/aws-ia/terraform-aws-eks-blueprints

cd terraform-aws-eks-blueprints/patterns/karpenter

tree

versions.tf

terraform {

required_version = ">= 1.3"

required_providers {

aws = {

source = "hashicorp/aws"

version = ">= 5.34"

}

helm = {

source = "hashicorp/helm"

version = ">= 2.9"

}

kubernetes = {

source = "hashicorp/kubernetes"

version = ">= 2.20"

}

}

# ## Used for end-to-end testing on project; update to suit your needs

# backend "s3" {

# bucket = "terraform-ssp-github-actions-state"

# region = "us-west-2"

# key = "e2e/karpenter/terraform.tfstate"

# }

}

main.tf ← edit the locals block

provider "aws" {

region = local.region

}

# Required for public ECR where Karpenter artifacts are hosted

provider "aws" {

region = "us-east-1"

alias = "virginia"

}

provider "kubernetes" {

host = module.eks.cluster_endpoint

cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

command = "aws"

# This requires the awscli to be installed locally where Terraform is executed

args = ["eks", "get-token", "--cluster-name", module.eks.cluster_name]

}

}

provider "helm" {

kubernetes {

host = module.eks.cluster_endpoint

cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

command = "aws"

# This requires the awscli to be installed locally where Terraform is executed

args = ["eks", "get-token", "--cluster-name", module.eks.cluster_name]

}

}

}

data "aws_ecrpublic_authorization_token" "token" {

provider = aws.virginia

}

data "aws_availability_zones" "available" {}

locals {

name = "t101-${basename(path.cwd)}"

region = "ap-northeast-2"

vpc_cidr = "10.10.0.0/16"

azs = slice(data.aws_availability_zones.available.names, 0, 3)

tags = {

Blueprint = local.name

GithubRepo = "github.com/aws-ia/terraform-aws-eks-blueprints"

}

}

################################################################################

# Cluster

################################################################################

module "eks" {

source = "terraform-aws-modules/eks/aws"

version = "~> 20.11"

cluster_name = local.name

cluster_version = "1.30"

cluster_endpoint_public_access = true

vpc_id = module.vpc.vpc_id

subnet_ids = module.vpc.private_subnets

# Fargate profiles use the cluster primary security group so these are not utilized

create_cluster_security_group = false

create_node_security_group = false

enable_cluster_creator_admin_permissions = true

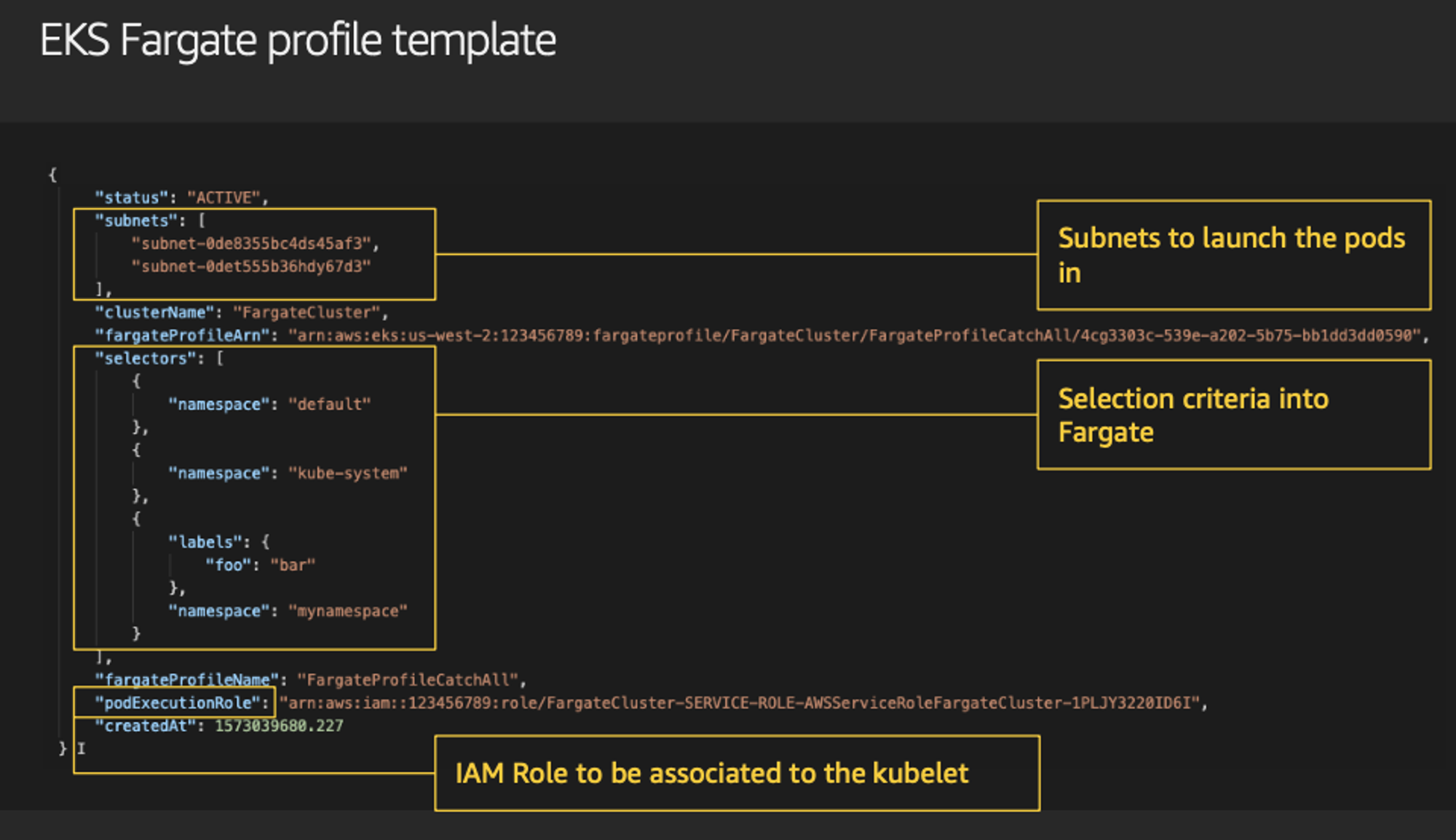

fargate_profiles = {

karpenter = {

selectors = [

{ namespace = "karpenter" }

]

}

kube_system = {

name = "kube-system"

selectors = [

{ namespace = "kube-system" }

]

}

}

tags = merge(local.tags, {

# NOTE - if creating multiple security groups with this module, only tag the

# security group that Karpenter should utilize with the following tag

# (i.e. - at most, only one security group should have this tag in your account)

"karpenter.sh/discovery" = local.name

})

}

################################################################################

# EKS Blueprints Addons

################################################################################

module "eks_blueprints_addons" {

source = "aws-ia/eks-blueprints-addons/aws"

version = "~> 1.16"

cluster_name = module.eks.cluster_name

cluster_endpoint = module.eks.cluster_endpoint

cluster_version = module.eks.cluster_version

oidc_provider_arn = module.eks.oidc_provider_arn

# We want to wait for the Fargate profiles to be deployed first

create_delay_dependencies = [for prof in module.eks.fargate_profiles : prof.fargate_profile_arn]

eks_addons = {

coredns = {

configuration_values = jsonencode({

computeType = "Fargate"

# Ensure that we fully utilize the minimum amount of resources that are supplied by

# Fargate https://docs.aws.amazon.com/eks/latest/userguide/fargate-pod-configuration.html

# Fargate adds 256 MB to each pod's memory reservation for the required Kubernetes

# components (kubelet, kube-proxy, and containerd). Fargate rounds up to the following

# compute configuration that most closely matches the sum of vCPU and memory requests in

# order to ensure pods always have the resources that they need to run.

resources = {

limits = {

cpu = "0.25"

# We are targeting the smallest Task size of 512Mb, so we subtract 256Mb from the

# request/limit to ensure we can fit within that task

memory = "256M"

}

requests = {

cpu = "0.25"

# We are targeting the smallest Task size of 512Mb, so we subtract 256Mb from the

# request/limit to ensure we can fit within that task

memory = "256M"

}

}

})

}

vpc-cni = {}

kube-proxy = {}

}

enable_karpenter = true

karpenter = {

repository_username = data.aws_ecrpublic_authorization_token.token.user_name

repository_password = data.aws_ecrpublic_authorization_token.token.password

}

karpenter_node = {

# Use static name so that it matches what is defined in `karpenter.yaml` example manifest

iam_role_use_name_prefix = false

}

tags = local.tags

}

resource "aws_eks_access_entry" "karpenter_node_access_entry" {

cluster_name = module.eks.cluster_name

principal_arn = module.eks_blueprints_addons.karpenter.node_iam_role_arn

kubernetes_groups = []

type = "EC2_LINUX"

}

################################################################################

# Supporting Resources

################################################################################

module "vpc" {

source = "terraform-aws-modules/vpc/aws"

version = "~> 5.0"

name = local.name

cidr = local.vpc_cidr

azs = local.azs

private_subnets = [for k, v in local.azs : cidrsubnet(local.vpc_cidr, 4, k)]

public_subnets = [for k, v in local.azs : cidrsubnet(local.vpc_cidr, 8, k + 48)]

enable_nat_gateway = true

single_nat_gateway = true

public_subnet_tags = {

"kubernetes.io/role/elb" = 1

}

private_subnet_tags = {

"kubernetes.io/role/internal-elb" = 1

# Tags subnets for Karpenter auto-discovery

"karpenter.sh/discovery" = local.name

}

tags = local.tags

}
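The CoreDNS comments in main.tf describe how Fargate sizes a pod: it adds 256 MB for the Kubernetes components and then rounds up to the nearest supported vCPU/memory combination. The following is a minimal Python sketch of that arithmetic, not AWS's actual implementation. The `fargate_size` helper is hypothetical, and the configuration table is a partial copy written from the linked AWS documentation, so check it against the current docs before relying on it. Kubernetes reads `"256M"` as 256 × 10^6 bytes, which is why the request is passed in bytes.

```python
# Assumption: partial table of Fargate (vCPU, memory MiB) combinations,
# written from the AWS "Fargate pod configuration" page; not exhaustive.
FARGATE_CONFIGS = [
    (0.25, [512, 1024, 2048]),
    (0.5, [1024, 2048, 3072, 4096]),
    (1.0, [2048, 3072, 4096, 5120, 6144, 7168, 8192]),
]

# Memory Fargate reserves per pod for kubelet, kube-proxy and containerd.
FARGATE_OVERHEAD_MIB = 256


def fargate_size(cpu_request: float, memory_request_bytes: int) -> tuple[float, int]:
    """Return the smallest (vCPU, MiB) combination that fits the request plus overhead."""
    needed_mib = memory_request_bytes / 2**20 + FARGATE_OVERHEAD_MIB
    for vcpu, memory_options in FARGATE_CONFIGS:
        if vcpu < cpu_request:
            continue  # not enough CPU in this tier
        for memory_mib in memory_options:
            if memory_mib >= needed_mib:
                return vcpu, memory_mib
    raise ValueError("request does not fit the partial table used in this sketch")


# CoreDNS as configured in main.tf: cpu = "0.25", memory = "256M"
coredns = fargate_size(0.25, 256 * 10**6)
print(coredns)  # (0.25, 512)

# Requesting 512M instead would spill into the next memory size
bigger = fargate_size(0.25, 512 * 10**6)
print(bigger)  # (0.25, 1024)
```

This shows why the pattern requests 256M rather than 512M: the overhead is added on top of the request, so asking for the full 512 MB would push the pod into the 1 GB configuration.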

outputs.tf

output "configure_kubectl" {

description = "Configure kubectl: make sure you're logged in with the correct AWS profile and run the following command to update your kubeconfig"

value = "aws eks --region ${local.region} update-kubeconfig --name ${module.eks.cluster_name}"

}

init

terraform init

tree .terraform

cat .terraform/modules/modules.json | jq

tree .terraform/providers/registry.terraform.io/hashicorp -L 2

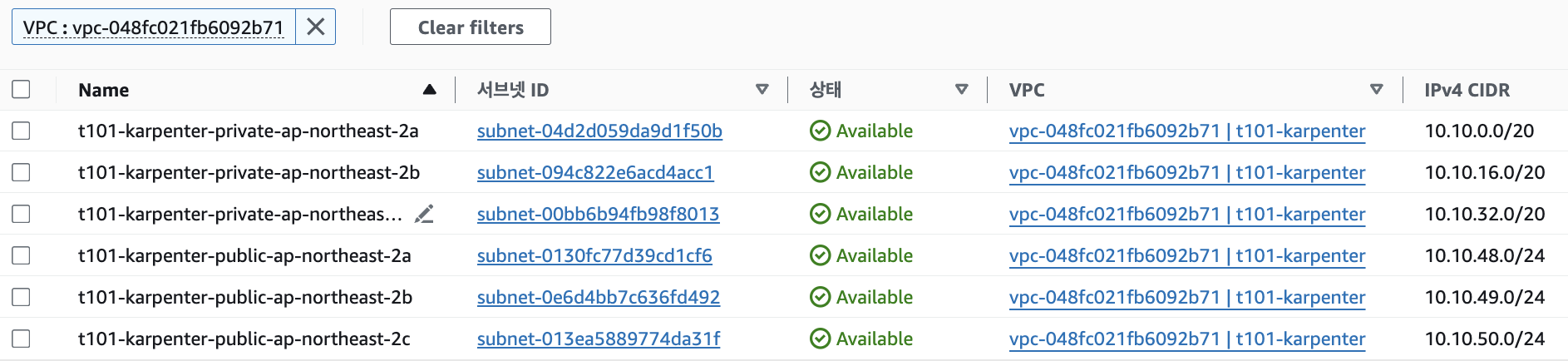

Deploy the VPC: takes about 3 minutes

# Check VPC info

aws ec2 describe-vpcs --filter 'Name=isDefault,Values=false' --output yaml

# Deploy the VPC: takes about 3 minutes

terraform apply -target="module.vpc" -auto-approve

# Verify the deployment

terraform state list

terraform show

...

# Check VPC info

aws ec2 describe-vpcs --filter 'Name=isDefault,Values=false' --output yaml

# Check the details

echo "data.aws_availability_zones.available" | terraform console

{

"all_availability_zones" = tobool(null)

"exclude_names" = toset(null) /* of string */

"exclude_zone_ids" = toset(null) /* of string */

"filter" = toset(null) /* of object */

"group_names" = toset([

"ap-northeast-2",

])

"id" = "ap-northeast-2"

"names" = tolist([

"ap-northeast-2a",

"ap-northeast-2b",

"ap-northeast-2c",

"ap-northeast-2d",

])

"state" = tostring(null)

"timeouts" = null /* object */

"zone_ids" = tolist([

"apne2-az1",

"apne2-az2",

"apne2-az3",

"apne2-az4",

])

}

terraform state show 'module.vpc.aws_vpc.this[0]'

VPCID=<your own VPC ID>

aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" | jq

aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --output text

# Check the CIDRs of the public and private subnets

## private_subnets = [for k, v in local.azs : cidrsubnet(local.vpc_cidr, 4, k)]

## public_subnets = [for k, v in local.azs : cidrsubnet(local.vpc_cidr, 8, k + 48)]

terraform state show 'module.vpc.aws_subnet.public[0]'

terraform state show 'module.vpc.aws_subnet.private[0]'
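To see which CIDRs the two `cidrsubnet` expressions should yield before comparing them with the state output, the calculation can be reproduced in Python. This is a sketch under assumptions: the `cidrsubnet` helper below is a hypothetical re-implementation for IPv4 built on the standard `ipaddress` module, not Terraform's own code, and the AZ list is copied from the `terraform console` output above.

```python
import ipaddress


def cidrsubnet(prefix: str, newbits: int, netnum: int) -> str:
    """Mimic Terraform's cidrsubnet(): extend the prefix by newbits, pick subnet number netnum."""
    subnets = list(ipaddress.ip_network(prefix).subnets(prefixlen_diff=newbits))
    return str(subnets[netnum])


vpc_cidr = "10.10.0.0/16"

# data.aws_availability_zones.available.names returned four AZs in ap-northeast-2;
# slice(..., 0, 3) in main.tf keeps the first three.
all_azs = ["ap-northeast-2a", "ap-northeast-2b", "ap-northeast-2c", "ap-northeast-2d"]
azs = all_azs[0:3]

private_subnets = [cidrsubnet(vpc_cidr, 4, k) for k, _ in enumerate(azs)]
public_subnets = [cidrsubnet(vpc_cidr, 8, k + 48) for k, _ in enumerate(azs)]

print(private_subnets)  # ['10.10.0.0/20', '10.10.16.0/20', '10.10.32.0/20']
print(public_subnets)   # ['10.10.48.0/24', '10.10.49.0/24', '10.10.50.0/24']
```

The offset of 48 places the public /24 subnets directly after the three private /20 blocks, which end at 10.10.47.255, so the two ranges do not overlap.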

Verify

Deploy EKS: takes about 13 minutes

# Deploy EKS: takes about 13 minutes

terraform apply -target="module.eks" -auto-approve

# Verify the deployment

terraform state list

terraform show

...

terraform output

configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name t101-karpenter"

# EKS credentials (update kubeconfig)

## aws eks --region <REGION> update-kubeconfig --name <CLUSTER_NAME> --alias <CLUSTER_NAME>

aws eks --region ap-northeast-2 update-kubeconfig --name t101-karpenter

cat ~/.kube/config

# (Optional) Rename the context

kubectl config rename-context "arn:aws:eks:ap-northeast-2:$(aws sts get-caller-identity --query 'Account' --output text):cluster/t101-karpenter" "T101-Lab"

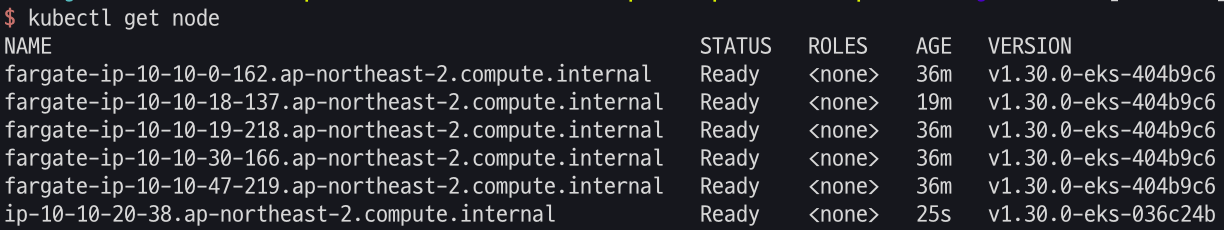

# Check k8s node and pod info

kubectl cluster-info

kubectl get node

kubectl get pod -A

# Check the details

terraform state list

terraform state show 'module.eks.data.aws_caller_identity.current'

terraform state show 'module.eks.data.aws_iam_session_context.current'

terraform state show 'module.eks.aws_eks_cluster.this[0]'

terraform state show 'module.eks.data.tls_certificate.this[0]'

terraform state show 'module.eks.aws_cloudwatch_log_group.this[0]'

terraform state show 'module.eks.aws_eks_access_entry.this["cluster_creator"]'

terraform state show 'module.eks.aws_iam_openid_connect_provider.oidc_provider[0]'

terraform state show 'module.eks.data.aws_partition.current'

terraform state show 'module.eks.aws_iam_policy.cluster_encryption[0]'

terraform state show 'module.eks.aws_iam_role.this[0]'

terraform state show 'module.eks.time_sleep.this[0]'

terraform state show 'module.eks.module.kms.aws_kms_key.this[0]'

terraform state show 'module.eks.module.fargate_profile["kube_system"].aws_eks_fargate_profile.this[0]'

terraform state show 'module.eks.module.fargate_profile["karpenter"].aws_eks_fargate_profile.this[0]'

Verify

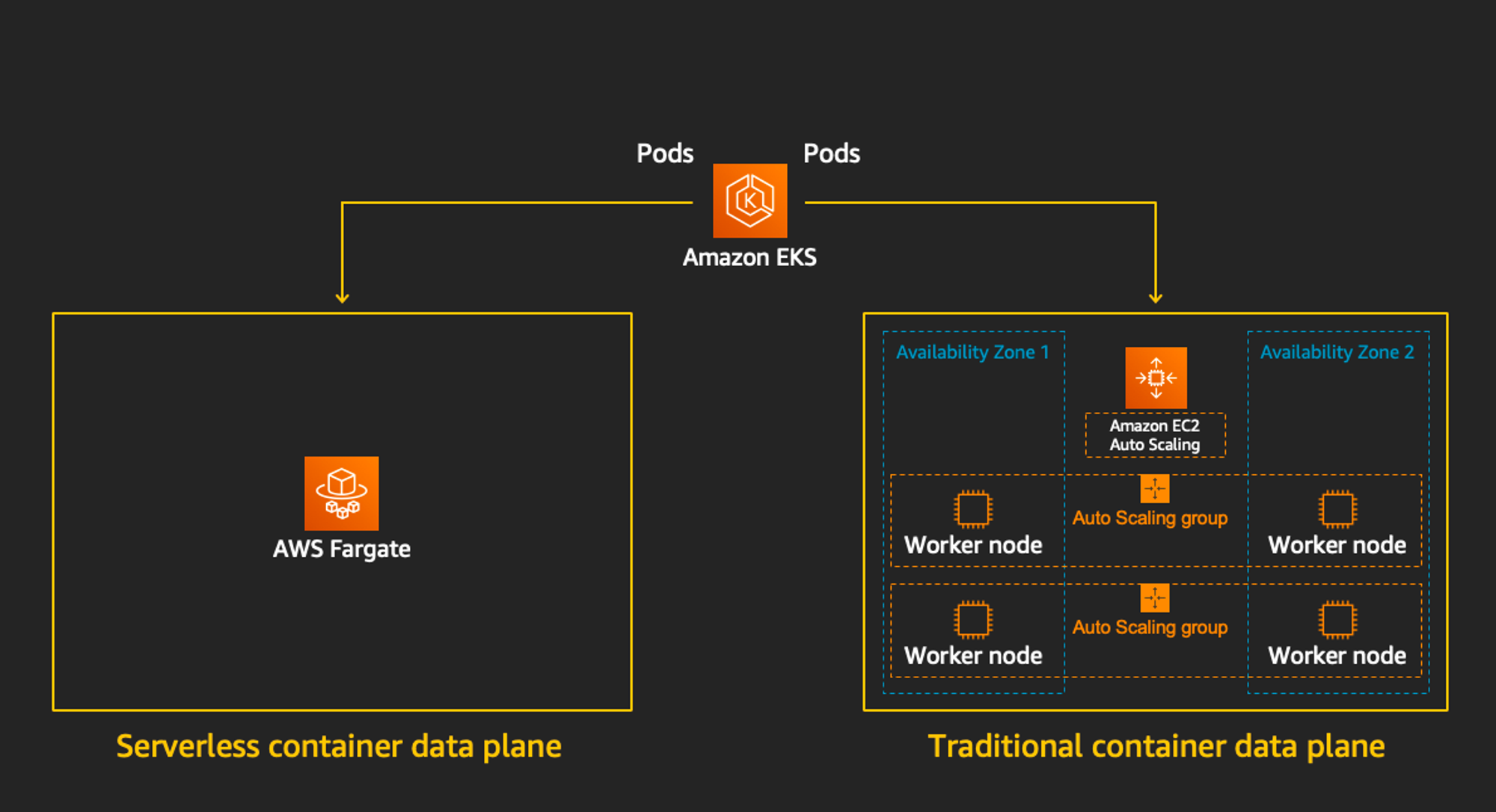

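Fargate overview: EKS (control plane) + Fargate (data plane) gives a fully serverless (= AWS-managed) cluster

No Cluster Autoscaler is needed, and VM-level isolation is available (VM isolation at Pod level)

You create a Fargate profile (the subnets pods will use, plus namespace and label conditions) so that the matching pods run on Fargate

In EKS, the scheduler decides which node runs a pod based on specific conditions, or specific settings can be used to make a pod run on particular nodes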
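The profile matching described above can be sketched in Python. This is a simplified model, not the EKS implementation: it assumes exact namespace matches and requires every selector label to be present on the pod. The `matching_profile` helper is hypothetical; the profile data mirrors the `fargate_profiles` block in main.tf.

```python
# Mirrors fargate_profiles in main.tf: one namespace selector per profile, no labels.
profiles = {
    "karpenter": [{"namespace": "karpenter"}],
    "kube-system": [{"namespace": "kube-system"}],
}


def matching_profile(namespace: str, labels: dict, profiles: dict):
    """Return the name of the first profile whose selector matches the pod, else None."""
    for name, selectors in profiles.items():
        for selector in selectors:
            if selector["namespace"] != namespace:
                continue
            wanted = selector.get("labels", {})
            # Every label in the selector must be present on the pod with the same value
            if all(labels.get(key) == value for key, value in wanted.items()):
                return name
    return None


# The Karpenter controller pod lands on Fargate
on_fargate = matching_profile("karpenter", {"app.kubernetes.io/name": "karpenter"}, profiles)
print(on_fargate)  # karpenter

# A pod in the default namespace matches no profile, so it needs a regular node
not_on_fargate = matching_profile("default", {"app": "inflate"}, profiles)
print(not_on_fargate)  # None
```

The second case is the one the rest of this lab relies on: workloads outside the `karpenter` and `kube-system` namespaces are not picked up by Fargate, so they stay pending until Karpenter provisions EC2 capacity for them.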

Data Plane

Deploy the addons & the Karpenter Helm chart: takes about 2 minutes

# Deploy: takes about 2 minutes

terraform apply -auto-approve

# Verify

terraform state list

terraform show

...

# Check k8s cluster, node, and pod info

kubectl cluster-info

kubectl get node -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

fargate-ip-10-10-36-94.ap-northeast-2.compute.internal Ready <none> 10m v1.30.0-eks-404b9c6 10.10.36.94 <none> Amazon Linux 2 5.10.219-208.866.amzn2.x86_64 containerd://1.7.11

fargate-ip-10-10-4-201.ap-northeast-2.compute.internal Ready <none> 10m v1.30.0-eks-404b9c6 10.10.4.201 <none> Amazon Linux 2 5.10.219-208.866.amzn2.x86_64 containerd://1.7.11

fargate-ip-10-10-43-93.ap-northeast-2.compute.internal Ready <none> 10m v1.30.0-eks-404b9c6 10.10.43.93 <none> Amazon Linux 2 5.10.219-208.866.amzn2.x86_64 containerd://1.7.11

fargate-ip-10-10-46-178.ap-northeast-2.compute.internal Ready <none> 10m v1.30.0-eks-404b9c6 10.10.46.178 <none> Amazon Linux 2 5.10.219-208.866.amzn2.x86_64 containerd://1.7.11

kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

karpenter karpenter-6b8687f5db-r9b7q 1/1 Running 0 12m 10.10.36.94 fargate-ip-10-10-36-94.ap-northeast-2.compute.internal <none> <none>

karpenter karpenter-6b8687f5db-v8zwb 1/1 Running 0 12m 10.10.46.178 fargate-ip-10-10-46-178.ap-northeast-2.compute.internal <none> <none>

kube-system coredns-86dcddd859-x9zp8 1/1 Running 0 12m 10.10.4.201 fargate-ip-10-10-4-201.ap-northeast-2.compute.internal <none> <none>

kube-system coredns-86dcddd859-xxk97 1/1 Running 0 12m 10.10.43.93 fargate-ip-10-10-43-93.ap-northeast-2.compute.internal <none> <none>

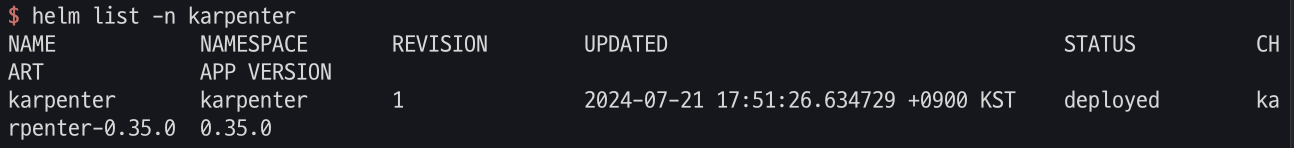

# Check the helm chart

helm list -n karpenter

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

karpenter karpenter 1 2024-07-20 23:34:26.74931 +0900 KST deployed karpenter-0.35.0 0.35.0

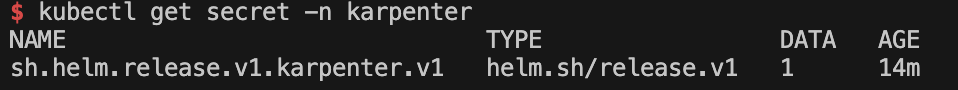

# Check secrets: they are encrypted with KMS - Encrypt Kubernetes secrets with AWS KMS on existing clusters

## Symmetric, Can encrypt and decrypt data , Created in the same AWS Region as the cluster

## Warning - You can't disable secrets encryption after enabling it. This action is irreversible.

kubectl get secret -n karpenter

kubectl get secret -n karpenter sh.helm.release.v1.karpenter.v1 -o json | jq

# Check the details

terraform state list

terraform state show 'data.aws_ecrpublic_authorization_token.token'

terraform state show 'aws_eks_access_entry.karpenter_node_access_entry'

terraform state show 'module.eks_blueprints_addons.data.aws_caller_identity.current'

terraform state show 'module.eks_blueprints_addons.data.aws_eks_addon_version.this["coredns"]'

terraform state show 'module.eks_blueprints_addons.aws_cloudwatch_event_rule.karpenter["health_event"]'

terraform state show 'module.eks_blueprints_addons.aws_cloudwatch_event_target.karpenter["health_event"]'

terraform state show 'module.eks_blueprints_addons.aws_eks_addon.this["coredns"]'

terraform state show 'module.eks_blueprints_addons.aws_iam_role.karpenter[0]'

terraform state show 'module.eks_blueprints_addons.aws_iam_instance_profile.karpenter[0]'

terraform state show 'module.eks_blueprints_addons.module.karpenter.data.aws_iam_policy_document.this[0]'

terraform state show 'module.eks_blueprints_addons.module.karpenter.data.aws_iam_policy_document.assume[0]'

terraform state show 'module.eks_blueprints_addons.module.karpenter.aws_iam_policy.this[0]'

terraform state show 'module.eks_blueprints_addons.module.karpenter.helm_release.this[0]'

terraform state show 'module.eks_blueprints_addons.module.karpenter_sqs.aws_sqs_queue.this[0]'

terraform state show 'module.eks_blueprints_addons.module.karpenter_sqs.aws_sqs_queue_policy.this[0]'

Verify

(Optional) Install eks-node-viewer: shows each node's allocatable capacity against the resources requested by scheduled pods, not actual pod resource usage - Github


# macOS

brew tap aws/tap

brew install eks-node-viewer

# Windows/Linux Manual

## Install Go

go version

## Install EKS Node Viewer: takes 2 minutes or more

go install github.com/awslabs/eks-node-viewer/cmd/eks-node-viewer@latest

# [New terminal] Display both CPU and Memory Usage

eks-node-viewer --resources cpu,memory

# Standard usage

eks-node-viewer

# Karpenter nodes only

eks-node-viewer --node-selector karpenter.sh/nodepool

# Display extra labels, i.e. AZ

eks-node-viewer --extra-labels topology.kubernetes.io/zone

# Sort by CPU usage in descending order

eks-node-viewer --node-sort=eks-node-viewer/node-cpu-usage=dsc

# Specify a particular AWS profile and region

AWS_PROFILE=myprofile AWS_REGION=us-west-2 eks-node-viewer

Install kube-ops-view: shows the state of pods on each node in real time on a web page - Link

# Deploy with helm

helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system

# Port forwarding

kubectl port-forward deployment/kube-ops-view -n kube-system 8080:8080 &

# Check the access URLs: 1x, 1.5x, and 3x scale respectively

echo -e "KUBE-OPS-VIEW URL = http://localhost:8080"

echo -e "KUBE-OPS-VIEW URL = http://localhost:8080/#scale=1.5"

echo -e "KUBE-OPS-VIEW URL = http://localhost:8080/#scale=3"

# Uninstall (run only when you are done with kube-ops-view)

helm uninstall kube-ops-view -n kube-system

Karpenter overview: a node lifecycle management solution that provides compute resources within seconds - https://ec2spotworkshops.com/karpenter.html

karpenter.yaml ← needs editing

---
apiVersion: karpenter.k8s.aws/v1beta1
kind: EC2NodeClass
metadata:
  name: default
spec:
  amiFamily: AL2
  role: karpenter-t101-karpenter
  subnetSelectorTerms:
    - tags:
        karpenter.sh/discovery: t101-karpenter
  securityGroupSelectorTerms:
    - tags:
        karpenter.sh/discovery: t101-karpenter
  tags:
    karpenter.sh/discovery: t101-karpenter
---
apiVersion: karpenter.sh/v1beta1
kind: NodePool
metadata:
  name: default
spec:
  template:
    spec:
      nodeClassRef:
        name: default
      requirements:
        - key: "karpenter.k8s.aws/instance-category"
          operator: In
          values: ["c", "m", "r"]
        - key: "karpenter.k8s.aws/instance-cpu"
          operator: In
          values: ["4", "8", "16", "32"]
        - key: "karpenter.k8s.aws/instance-hypervisor"
          operator: In
          values: ["nitro"]
        - key: "karpenter.k8s.aws/instance-generation"
          operator: Gt
          values: ["2"]
  limits:
    cpu: 1000
  disruption:
    consolidationPolicy: WhenEmpty
    consolidateAfter: 30s
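To get a feel for what the NodePool `requirements` allow, the `In` and `Gt` operators can be modeled in a few lines of Python. This is only a sketch of the filtering step: Karpenter's real selection also weighs pending pod requests, price, and availability. The `satisfies` helper is hypothetical, and the instance catalog is a small hand-written sample, not data queried from AWS.

```python
# Requirements copied from the NodePool in karpenter.yaml
requirements = [
    {"key": "karpenter.k8s.aws/instance-category", "operator": "In", "values": ["c", "m", "r"]},
    {"key": "karpenter.k8s.aws/instance-cpu", "operator": "In", "values": ["4", "8", "16", "32"]},
    {"key": "karpenter.k8s.aws/instance-hypervisor", "operator": "In", "values": ["nitro"]},
    {"key": "karpenter.k8s.aws/instance-generation", "operator": "Gt", "values": ["2"]},
]

# Assumption: hand-written sample of instance types with the labels Karpenter would attach
P = "karpenter.k8s.aws/instance-"
catalog = {
    "c5.xlarge":   {P + "category": "c", P + "cpu": "4", P + "hypervisor": "nitro", P + "generation": "5"},
    "m5.large":    {P + "category": "m", P + "cpu": "2", P + "hypervisor": "nitro", P + "generation": "5"},
    "t3.xlarge":   {P + "category": "t", P + "cpu": "4", P + "hypervisor": "nitro", P + "generation": "3"},
    "c4.xlarge":   {P + "category": "c", P + "cpu": "4", P + "hypervisor": "xen",   P + "generation": "4"},
    "r6i.2xlarge": {P + "category": "r", P + "cpu": "8", P + "hypervisor": "nitro", P + "generation": "6"},
}


def satisfies(labels: dict, requirement: dict) -> bool:
    """Evaluate a single requirement (only the In and Gt operators are modeled)."""
    value = labels.get(requirement["key"])
    if value is None:
        return False
    if requirement["operator"] == "In":
        return value in requirement["values"]
    if requirement["operator"] == "Gt":
        return int(value) > int(requirement["values"][0])
    raise ValueError(f"operator not modeled in this sketch: {requirement['operator']}")


# An instance type is a candidate only if it passes every requirement
allowed = [
    name for name, labels in catalog.items()
    if all(satisfies(labels, req) for req in requirements)
]
print(allowed)  # ['c5.xlarge', 'r6i.2xlarge']
```

In this sample, `m5.large` is rejected on vCPU count, `t3.xlarge` on category, and `c4.xlarge` on hypervisor, which leaves only Nitro-based c/m/r instances of generation 3 or later with 4 to 32 vCPUs.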

example.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: inflate
spec:
  replicas: 0
  selector:
    matchLabels:
      app: inflate
  template:
    metadata:
      labels:
        app: inflate
    spec:
      terminationGracePeriodSeconds: 0
      containers:
        - name: inflate
          image: public.ecr.aws/eks-distro/kubernetes/pause:3.7
          resources:
            requests:
              cpu: 1

# Provision the Karpenter EC2NodeClass and NodePool resources which provide Karpenter the necessary configurations to provision EC2 resources:

kubectl apply -f karpenter.yaml

# Verify

kubectl get ec2nodeclass,nodepool

NAME AGE

ec2nodeclass.karpenter.k8s.aws/default 31s

NAME NODECLASS

nodepool.karpenter.sh/default default

# Once the Karpenter resources are in place, Karpenter will provision the necessary EC2 resources to satisfy any pending pods in the scheduler's queue. You can demonstrate this with the example deployment provided.

# First deploy the example deployment which has the initial number replicas set to 0:

kubectl apply -f example.yaml

kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

inflate 0/0 0 0 42s

# (Optional) New terminal: follow the Karpenter controller logs

kubectl logs -f -n karpenter -l app.kubernetes.io/name=karpenter -c controller

# When you scale the example deployment, you should see Karpenter respond by quickly provisioning EC2 resources to satisfy those pending pod requests:

kubectl scale deployment inflate --replicas=3 && kubectl get pod -w

Verify

Cleanup

# Remove kube-ops-view

helm uninstall kube-ops-view -n kube-system

# Remove the addons & the Karpenter Helm release: takes about 1 minute

terraform destroy -target="module.eks_blueprints_addons" -auto-approve

# Delete EKS: takes about 8 minutes

terraform destroy -target="module.eks" -auto-approve

# Delete the VPC: if the VPC fails to delete, delete it manually in the AWS console -> if network interfaces (vNIC/ENI) are left behind, force-delete them

terraform destroy -auto-approve

# Confirm the VPC is deleted

aws ec2 describe-vpcs --filter 'Name=isDefault,Values=false' --output yaml

# Delete the kubeconfig

rm -rf ~/.kube/config
